
NIF 2.0

All documentation has been moved to http://persistence.uni-leipzig.org/nlp2rdf/.

Mailing List

If you are interested in NLP2RDF, you can write an email to the nlp2rdf discussion list or sign up for it.



Latest Posts:

Avoid AI Detection: Why It’s Unreliable and Better Alternatives

You may be wondering how far you can trust the AI detection tools everyone seems to be talking about. They promise to sniff out machine-generated content, but they often get it wrong, flagging genuine human work while missing text that really was produced by AI. If you rely on them for accuracy or fairness, you could be risking more than you realize. There’s also a bigger issue beneath the surface, one that could affect how we define originality itself.

The Accuracy Problem: Why AI Detection Fails

AI detection tools are designed to identify text generated by machine-learning models, but their effectiveness has been widely criticized. A major problem is false positives, where authentic human-written content is incorrectly flagged as AI-generated. Accuracy problems were serious enough that OpenAI withdrew its own AI text classifier in July 2023, citing its low rate of accuracy.

Many AI detection systems perform little better than random guessing, especially on writing with varied or distinctive styles. Such errors can damage a writer's credibility in academic and professional settings, and non-native English speakers, who are more likely to be misclassified, are particularly exposed.

Relying on these imperfect tools can expose writers to unwarranted scrutiny, so their outputs should be interpreted with caution.

Bias and Unfairness in AI Content Detectors

AI content detectors also raise concerns about bias against certain groups of writers.

Non-native English speakers and writers with distinctive styles may see higher false positive rates, meaning their work is more often wrongly identified as AI-generated. The burden falls disproportionately on English as a Second Language (ESL) writers and neurodivergent individuals, who may be unfairly penalized simply for the way they express themselves.

High false positive rates undermine trust in these tools and create real obstacles for the people who are misclassified. Relying on biased AI detectors therefore works against a fair and equitable writing environment.

The Impact on Education and Academic Integrity

AI detection tools are increasingly used in schools and universities, with significant consequences for education and academic integrity. As the tools spread, concerns about their accuracy and their effect on trust have grown. Research indicates that approximately 4% of human-written sentences may be wrongly identified as AI-generated. These errors harm the educational experience, especially for English as a Second Language (ESL) students, who face a heightened risk of being unjustly penalized, which can widen existing educational disparities.
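A per-sentence error rate of about 4% sounds small, but an essay contains many sentences. The short Python sketch below shows how that rate compounds across a document. It assumes, purely for illustration, that each human-written sentence is misflagged independently with probability 0.04; real detectors' errors are probably correlated, so treat the results as rough. The function names `p_any_flag` and `expected_flags` are hypothetical.

```python
def p_any_flag(fp_rate: float, n_sentences: int) -> float:
    """Probability that at least one of n human-written sentences is flagged,
    assuming (hypothetically) independent errors at a fixed per-sentence rate."""
    if not 0.0 <= fp_rate <= 1.0:
        raise ValueError("fp_rate must be between 0 and 1")
    if n_sentences < 0:
        raise ValueError("n_sentences must be non-negative")
    # Complement rule: 1 - P(no sentence is flagged)
    return 1.0 - (1.0 - fp_rate) ** n_sentences


def expected_flags(fp_rate: float, n_sentences: int) -> float:
    """Expected number of wrongly flagged sentences (linearity of expectation)."""
    return fp_rate * n_sentences


if __name__ == "__main__":
    for n in (10, 25, 50):
        print(f"{n} sentences: P(at least one flag) = {p_any_flag(0.04, n):.2f}, "
              f"expected false flags = {expected_flags(0.04, n):.1f}")
    # 10 sentences: P(at least one flag) = 0.34, expected false flags = 0.4
    # 25 sentences: P(at least one flag) = 0.64, expected false flags = 1.0
    # 50 sentences: P(at least one flag) = 0.87, expected false flags = 2.0
```

Under these assumptions, a fully human-written 25-sentence essay is more likely than not to contain at least one flagged sentence. Raising `fp_rate` to reflect the higher misclassification rates reported for ESL writers pushes these probabilities higher still.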

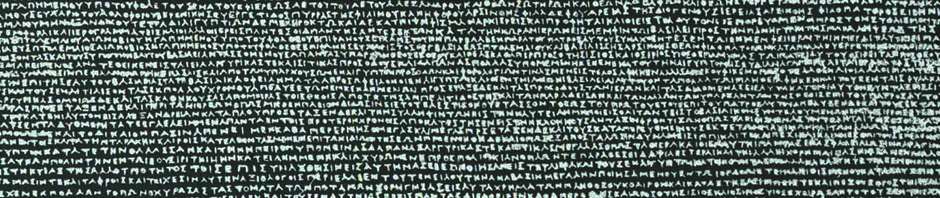

AI detectors have also flagged historical texts and classic literature, written long before generative AI existed, as AI-generated. Such errors raise serious questions about the reliability of these systems.

Rather than promoting genuine academic integrity, pervasive use of these technologies can breed doubt and suspicion between students and instructors, inhibiting creativity and damaging the relationship between teachers and learners.

Institutions should therefore examine carefully how they use AI detection, to ensure that it is consistent with fair assessment and academic collaboration.

Misleading Results: False Positives and Negatives

Misidentification by AI detection tools creates significant problems for students and educators alike. The tools produce false positives, classifying human-written text as AI-generated; reported examples include the classic fairy tale "Jack and the Beanstalk" and religious texts such as the Bible.

Non-native English speakers are at particular risk: studies indicate that their writing is 2 to 3 times more likely to be mislabeled as AI-generated.

Conversely, false negatives occur when AI-generated content goes undetected, so a clean result does not prove that a human wrote the text. Errors in both directions undermine trust in these systems.

These misclassifications can discourage diverse writing styles and diminish the educational experience. Relying solely on AI detection tools is therefore unlikely to be a dependable or equitable way to assess written work.
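False positives hurt even more once you consider how many submissions are actually AI-written. The Python sketch below applies Bayes' rule to estimate what share of flagged texts really are AI-generated. Every input (the share of AI-written submissions, the detection rate, the per-document false positive rate) is a hypothetical placeholder, not a measurement of any particular tool, and the function name `flag_precision` is illustrative only. The rates 0.08 and 0.12 simply double and triple 0.04, mimicking the 2 to 3 times higher misclassification rate reported for non-native English speakers.

```python
def flag_precision(prevalence: float, true_positive_rate: float,
                   false_positive_rate: float) -> float:
    """P(text is AI-generated | detector flags it), computed with Bayes' rule.

    prevalence: fraction of submissions that are actually AI-generated
    true_positive_rate: fraction of AI-generated texts the detector flags
    false_positive_rate: fraction of human-written texts the detector flags
    """
    for name, value in (("prevalence", prevalence),
                        ("true_positive_rate", true_positive_rate),
                        ("false_positive_rate", false_positive_rate)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    flagged_ai = true_positive_rate * prevalence              # correct flags
    flagged_human = false_positive_rate * (1.0 - prevalence)  # false flags
    total_flagged = flagged_ai + flagged_human
    if total_flagged == 0.0:
        raise ValueError("detector flags nothing; precision is undefined")
    return flagged_ai / total_flagged


if __name__ == "__main__":
    # Hypothetical inputs: 10% of submissions AI-written, 90% of those caught.
    for fpr in (0.04, 0.08, 0.12):
        p = flag_precision(0.10, 0.90, fpr)
        print(f"FPR {fpr:.2f}: {p:.0%} of flags are correct, "
              f"{1 - p:.0%} land on human writers")
    # FPR 0.04: 71% of flags are correct, 29% land on human writers
    # FPR 0.08: 56% of flags are correct, 44% land on human writers
    # FPR 0.12: 45% of flags are correct, 55% land on human writers
```

With these made-up numbers, even a detector that catches 90% of AI-written work produces flags that are wrong almost a third of the time. For a group whose false positive rate is tripled, more than half of all flags land on genuine human writing.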

Google’s Approach to AI-Generated Content

Google’s stance on AI-generated content puts the quality of content ahead of how it was created. Under its Search guidance, using AI to generate content is not penalized in itself, provided the content is helpful and high-quality and is not produced primarily to manipulate search rankings.

Google's evaluation criteria include E-E-A-T (experience, expertise, authoritativeness, and trustworthiness), which means that content can be rewarded whether it was written by a human or generated with AI, as long as it delivers real value to users.

Rather than focusing on AI detection, content creators are encouraged to prioritize original, useful material: content that addresses user needs and is informative and authentic, whether it was created by a human or with AI.

Testing Popular AI Detection Tools: What We Found

Testing popular AI detection tools raises serious concerns about their accuracy.

Tests of platforms such as Originality.AI and Turnitin indicate that these tools often produce unreliable results. Turnitin, for instance, has been documented to incorrectly flag about 1 in every 25 human-written sentences (4%).

Merlin, on the other hand, showed a tendency to misidentify AI-generated content as human-written, producing false negatives.

Pangram Labs proved more accurate than the others, but most AI detection tools performed only slightly better than random chance, particularly on less conventional forms of writing.

These observations show how difficult it is for current detectors to distinguish reliably between human-written and AI-generated text, and how unreliable many of today's options are.

Smarter Approaches to Managing AI in Content

Given the inaccuracies and biases of many AI detection tools, managing AI in content creation calls for a more nuanced approach. Depending on detection software alone leads to authentic work being misidentified as AI-generated, and non-native English speakers are disproportionately affected.

A more effective strategy is to promote ethical practices that ensure AI is used responsibly. Redesigning assignments to reward originality and creativity, for example, makes it harder for automated output to stand in for a student's own work.

Organizations may also achieve better outcomes by investing in AI automation and management platforms that emphasize transparency and quality control. Integrating AI deliberately lets them improve content quality while maintaining trust and fairness, which detection technology alone cannot achieve.

Building Trust With Transparent Policies and Ethical Use

Establishing transparent policies around the use of artificial intelligence (AI) is crucial in educational environments, where trust is foundational.

Clear, accessible guidelines, communicated both in person and in writing, set expectations that promote ethical use and uphold academic integrity. Discussing which uses of AI are permitted and which are not encourages transparency and engagement among students and can strengthen their trust in the institution.

Including specific AI policies in course syllabi gives students an easy reference for the rules and the consequences of breaking them, and makes clear what counts as acceptable use.

Emphasizing original thinking and diverse assessment methods also keeps the focus on the real goals of learning. Prioritizing ethical use over reliance on detection can improve the educational experience by encouraging critical thinking and personal development.

Conclusion

You can’t rely on AI detection tools to judge your work fairly; they’re too unpredictable and biased. Instead of stressing over these flawed systems, focus on developing your critical thinking and originality. When you prioritize genuine learning and honest expression, you build real skills and integrity. Choose environments that value trust, transparency, and ethical use over unreliable technology. Ultimately, you deserve to be measured by your own abilities, not by imperfect algorithms.